August 2, 2025

Vietnamese Emotion Recognition from Voice and Text: A Confidence-Based Approach

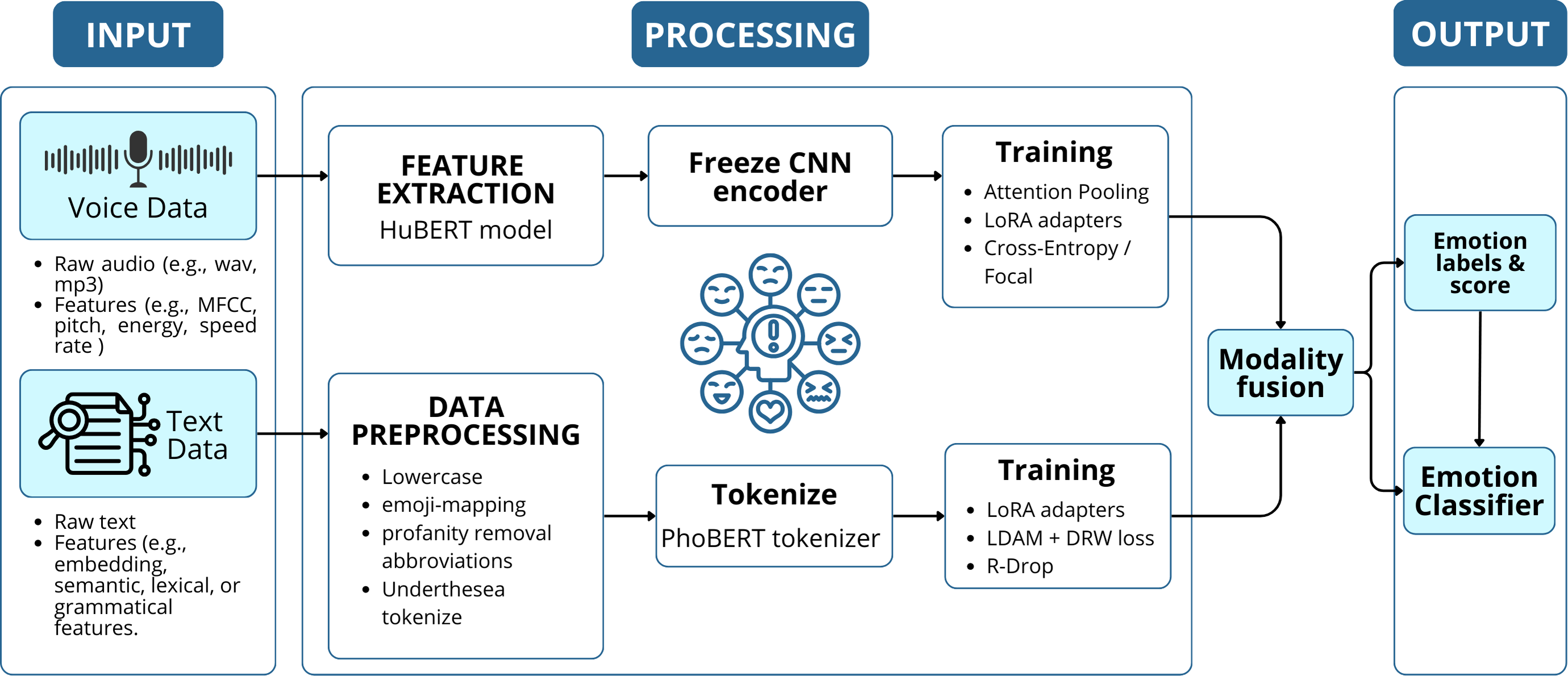

Accurate emotion recognition from human communication is pivotal for empathetic human–computer interaction, healthcare monitoring, and customer service. However, most systems focus on either speech or text, and few integrate both effectively, especially for underrepresented languages such as Vietnamese. In this study, we propose a lightweight, confidence-based multimodal fusion framework that combines a HuBERT-based voice classifier with a PhoBERT-v2 text classifier fine-tuned with the LDAM (Label-Distribution-Aware Margin) loss and Deferred Re-Weighting (DRW). Each branch outputs posterior probabilities over the emotion classes, and the final prediction comes from whichever modality is more confident. This avoids complex fusion networks and preserves real-time performance. On Vietnamese benchmarks, the text model achieves 87.35% accuracy and an F1-score of 87.28% on an extended UIT-VSMEC corpus, while the speech model attains 82.06% accuracy and an F1-score of 81.93% on the Bud500 dataset. The proposed fusion strategy improves macro-F1 over the single-modality baselines. These results indicate that simple, confidence-driven fusion is effective for Vietnamese emotion-aware applications and offers a practical path to deployment in resource-constrained, real-time settings.
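The fusion rule above is stated only at a high level. Below is a minimal sketch of max-confidence selection. It assumes that both branches score the same label set, that "confidence" means a branch's top-class posterior, and that ties go to the text branch. The function names `softmax` and `confidence_fusion`, the tie-break, and the label names are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw classifier logits into posterior probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def confidence_fusion(
    p_voice: Sequence[float],
    p_text: Sequence[float],
    labels: Sequence[str],
) -> Tuple[str, str, float]:
    """Return (label, chosen_modality, confidence) by max-posterior selection.

    Each branch's confidence is its highest class probability; the branch
    with the larger value alone decides the final label (hypothetical helper).
    """
    if not (len(p_voice) == len(p_text) == len(labels)):
        raise ValueError("both branches must score the same label set")
    conf_voice, conf_text = max(p_voice), max(p_text)
    # Assumption: on a tie, trust the text branch.
    if conf_text >= conf_voice:
        probs, modality, conf = p_text, "text", conf_text
    else:
        probs, modality, conf = p_voice, "voice", conf_voice
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best], modality, conf


if __name__ == "__main__":
    labels = ["anger", "enjoyment", "sadness"]  # hypothetical label subset
    p_voice = softmax([0.2, 1.1, 0.4])          # voice branch: top prob ~0.53
    p_text = [0.10, 0.15, 0.75]                 # text branch: top prob 0.75
    print(confidence_fusion(p_voice, p_text, labels))  # ('sadness', 'text', 0.75)
```

Because only one branch is consulted per utterance, the fusion step adds negligible cost on top of the two classifiers. This is consistent with the real-time motivation, though it also means the rule cannot combine partial evidence from both modalities.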

Accepted by: TNU Journal of Science and Technology; the 8th International Conference on Multimedia Analysis and Pattern Recognition (MAPR); and the XVIII National Science Conference on Basic Research and Application of Information Technology, "Digital Transformation and Future Trends" (FAIR 2025).

Members: Ngoc Tram Huynh Thi, Duc Dat Pham, Tan Duy Le, Kha-Tu Huynh